Written by:

Dr. Pronaya Bhattacharya and Dr. Pushan Kumar Dutta

Amity School of Engineering and Technology

Amity University Kolkata, India

The future of wireless communication envisions 6G-and-beyond networks delivering terabit-per-second (Tb/s) data rates, ultra-low latency (on the order of microseconds), and pervasive intelligence across diverse applications. Standards bodies such as the Third Generation Partnership Project (3GPP) and the International Telecommunication Union (ITU) are already studying next-generation requirements that surpass the foundational goals of Fifth Generation (5G) networks, which include massive connectivity, extreme reliability, and heightened security. Achieving these ambitious targets, however, raises significant technical and operational challenges. Terahertz (THz) frequency bands offer abundant spectrum but suffer from severe path loss and atmospheric absorption. Ultra-dense networks, crucial for guaranteed coverage and capacity, risk exacerbating interference and increasing energy consumption. Moreover, advanced use cases such as holographic telepresence, immersive extended reality (XR), and remote robotic surgery require intelligent resource allocation and semantic-level optimization that traditional rule-based approaches cannot deliver.

In parallel, the evolution of machine learning techniques has led to the emergence of Language Models (LMs), especially the powerful subset known as Large Language Models (LLMs), such as the Generative Pre-trained Transformer (GPT) family, Bidirectional Encoder Representations from Transformers (BERT), the Text-to-Text Transfer Transformer (T5), and others. LLMs are built primarily on transformer architectures, which enable them to interpret and generate human-like text. Their potential, however, is not confined to text processing: they can integrate heterogeneous data (e.g., numerical, textual, and contextual) and make sophisticated inferences. By analyzing large volumes of network logs, channel state information, and even policy documents written in natural language, LLMs can help manage network resources holistically, predict user behavior, and optimize communications at scale.

Broadly, LLMs can be categorized into encoder-only models (e.g., BERT), which excel at tasks like classification and semantic understanding; decoder-only models (e.g., GPT variants), which are adept at generating content or policy; and encoder-decoder models (e.g., T5), which combine both understanding and generation capabilities. These models can be further specialized or fine-tuned for domain-specific tasks such as semantic compression, predictive beamforming, or autonomous network slicing. In the context of 6G standardization, these capabilities align with the push toward automated, data-driven network orchestration, as highlighted in discussion forums for 3GPP Releases 19 and 20.

LLM Working for 6G Scenarios

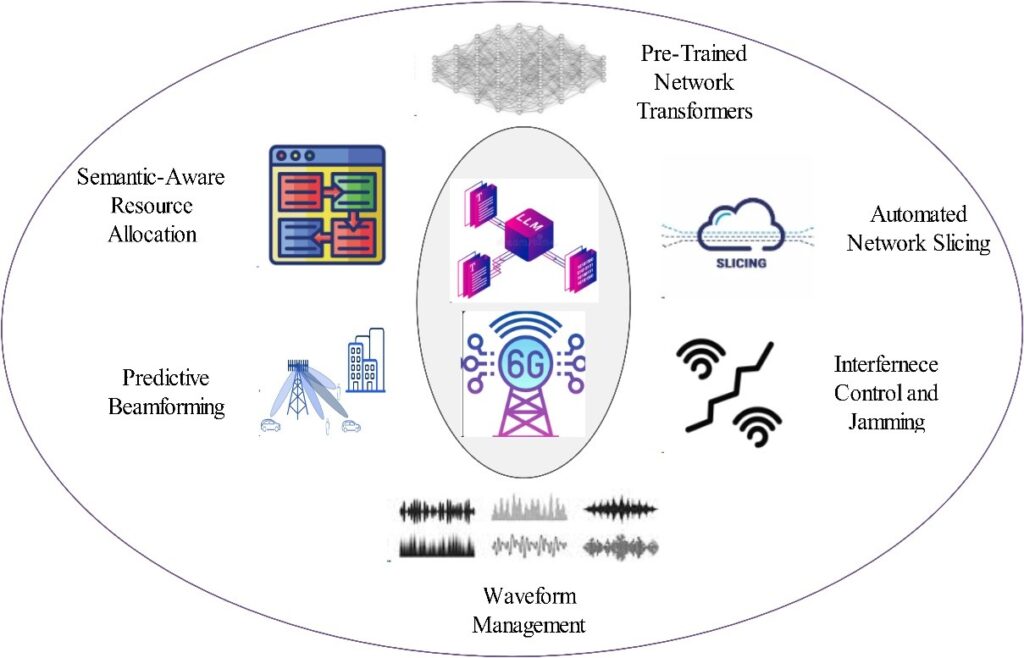

LLMs can address 6G’s hurdles through their unique ability to handle contextual information alongside numeric data. A Transformer-based LLM can, for instance, interpret raw network metrics (e.g., channel state information or throughput logs) in tandem with textual descriptions of network policies or environment conditions. This duality allows for the extraction of deeper insights that were previously inaccessible to purely numeric models.
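One simple way to picture this pairing of numeric and textual inputs is to serialize both into a single model input. The sketch below is only an illustration under stated assumptions: the function name `build_model_input`, the section headings, and the metric names are hypothetical, and no particular LLM or tokenizer is implied.

```python
from typing import Mapping


def build_model_input(
    metrics: Mapping[str, float],
    policy_text: str,
    context_notes: list[str],
) -> str:
    """Serialize numeric metrics and free-text context into one prompt string."""
    # Sort metric names so the same measurements always yield the same text.
    metric_lines = [f"- {name}: {value:g}" for name, value in sorted(metrics.items())]
    note_lines = [f"- {note}" for note in context_notes]
    sections = [
        "## Network metrics",
        *metric_lines,
        "## Policy",
        policy_text.strip(),
        "## Context",
        *note_lines,
    ]
    return "\n".join(sections)


# Hypothetical example values, not measurements from the article.
prompt = build_model_input(
    metrics={"sinr_db": 12.5, "throughput_mbps": 840.0, "cell_load": 0.72},
    policy_text="Guaranteed 1 Gbps for AR/VR devices in Zone 3.",
    context_notes=["Peak user traffic in corridor A at lunchtime"],
)
print(prompt)
```

The resulting string places the measurements, the policy statement, and the environment note side by side, which is the form a text-based model would need in order to reason over them jointly.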

- Semantic-Aware Resource Allocation

Traditional resource scheduling schemes often focus on maximizing signal-to-noise ratio (SNR) or minimizing interference without considering the “meaning” of the data being transmitted. LLMs can parse the semantic relevance of transmitted content, such as critical sensor updates versus background data, and allocate bandwidth accordingly. For instance, an LLM integrated with the base station’s scheduling module can dynamically prioritize telemedicine video feed packets over routine status messages, thus ensuring better utilization of the limited THz spectrum.

- Predictive Beamforming and Mobility Management

High-frequency THz links are sensitive to changes in user location or orientation. By combining real-time sensor data (GPS, accelerometers) and contextual logs (“peak user traffic in corridor A at lunchtime”), LLMs can predict handover requirements and schedule beam switching in advance. This proactive approach reduces packet loss and latency, key performance indicators set out in 6G drafts, and minimizes the overhead associated with frequent beam realignments.

Figure 1: Working scenarios of LLM integration in 6G
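As a rough illustration of scheduling a beam switch ahead of time, the sketch below extrapolates a user's position linearly from two samples and reports when the user is expected to cross into a neighbouring beam sector. The linear extrapolation is only a stand-in for the learned predictor discussed in this item. The 10-degree sector width, the base station placed at the origin, and all function names are assumptions made for this example.

```python
import math

BEAM_WIDTH_DEG = 10.0  # assumed angular width of each beam sector


def beam_index(x: float, y: float) -> int:
    """Map a position (base station at the origin) to the sector covering it."""
    angle = math.degrees(math.atan2(y, x)) % 360.0
    return int(angle // BEAM_WIDTH_DEG)


def predict_beam_switch(p_prev, p_now, dt, horizon_s, step_s=0.1):
    """Return (seconds_until_switch, next_beam), or None within the horizon.

    Velocity is estimated from the last two position samples and held
    constant, which is a deliberately simple placeholder for a learned model.
    """
    vx = (p_now[0] - p_prev[0]) / dt
    vy = (p_now[1] - p_prev[1]) / dt
    current = beam_index(*p_now)
    steps = int(round(horizon_s / step_s))
    for k in range(1, steps + 1):
        t = k * step_s
        candidate = beam_index(p_now[0] + vx * t, p_now[1] + vy * t)
        if candidate != current:
            return t, candidate
    return None


# Hypothetical user 10 m from the base station, walking at 1 m/s across the beam.
result = predict_beam_switch(p_prev=(10.0, -1.0), p_now=(10.0, 0.0), dt=1.0, horizon_s=5.0)
print(result)
```

For this input the user leaves sector 0 after roughly 1.8 s, so a scheduler could prepare beam 1 before the link degrades rather than reacting afterwards.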

- Network Slicing and Automated Policy Enforcement

6G networks will feature dynamic slices catering to distinct applications, e.g., ultra-reliable low-latency slices for industrial robotics and high-throughput slices for immersive Extended Reality (XR). An LLM can interpret textual Service-Level Agreements (SLAs) and real-time metrics to automatically configure and adapt slices. For example, it can read a policy statement specifying “guaranteed 1 Gbps for AR/VR devices in Zone 3” and then translate that into a slice configuration, adjusting resource allocation based on demand and interference levels.

- Anomaly Detection and Fault Diagnosis

Massive-scale 6G deployments produce staggering amounts of operational data—system logs, performance counters, error messages—that can overwhelm human operators. LLMs excel at summarizing unstructured text and numeric data, helping pinpoint anomalies (e.g., sudden throughput drops) and offering likely root causes or recommended fixes in a human-readable format.
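To make the "sudden throughput drop" example concrete, the following sketch flags samples that fall far below a rolling baseline and renders them as short text lines that could be handed to an LLM as context for root-cause suggestions. It is a plain statistical pre-filter, not an LLM, and the window size, threshold, function names, and sample values are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_throughput_drops(samples, window=5, threshold=3.0):
    """Flag samples more than `threshold` std devs below the preceding window."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # flat history: a z-score is undefined, so skip
        z = (samples[i] - mu) / sigma
        if z < -threshold:
            anomalies.append((i, samples[i], round(z, 1)))
    return anomalies


def summarize(anomalies, unit="Mbps"):
    """Render flagged samples as plain-text lines, e.g. as context for an LLM."""
    return [
        f"sample {i}: throughput {value} {unit}, "
        f"{abs(z)} standard deviations below the recent mean"
        for i, value, z in anomalies
    ]


# Hypothetical throughput trace with one sharp drop at index 6.
trace = [950, 940, 960, 955, 945, 950, 410, 948, 952]
anomalies = find_throughput_drops(trace)
report = summarize(anomalies)
print("\n".join(report))
```

Only the sample at index 6 is flagged here. Note that a flagged value still enters the next windows and inflates their spread, so a production filter would likely exclude or down-weight it.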

Models and Architectures for Specific Use Cases

Building on the general working principles of LLMs, different architectures can be tailored for precise 6G use cases:

- Encoder-Only Models (e.g., BERT Variants)

- Use Case: Semantic compression for massive IoT.

- Working Mechanism: The encoder stage can transform large streams of sensor data into a compressed semantic embedding, ensuring only the most relevant information is transmitted over the 6G link. This approach lowers bandwidth consumption and improves energy efficiency.

- Decoder-Only Models (e.g., GPT-Style)

- Use Case: Proactive resource allocation and traffic shaping.

- Working Mechanism: By generating token-by-token policies, a decoder-only LLM can adapt scheduling decisions in real time. It can, for example, generate a text-like “action sequence” that instructs the scheduler to reconfigure subcarriers or power levels in response to predicted interference or user density.

- Encoder-Decoder Models (e.g., T5, BART)

- Use Case: Multi-faceted network orchestration and anomaly resolution.

- Working Mechanism: Combining the deep comprehension of an encoder with the generative prowess of a decoder, these models can parse complex input data (network states, textual SLAs) and produce orchestrated commands or recommendations. This is particularly useful for large-scale operations like network slicing and policy-based management.

- Multimodal Transformers

- Use Case: Beamforming in THz and millimeter-wave (mmWave) bands.

- Working Mechanism: These models can handle numeric signals (channel state information, sensor readings) alongside textual knowledge (deployment data, interference logs) to produce robust beam configurations. By interpreting and aligning data from various sources, multimodal LLMs reduce the reliance on purely heuristic or table-driven solutions.
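The text-like "action sequence" mentioned for decoder-only models would need to be checked before a scheduler acts on it. The sketch below shows one possible way to do that, assuming a small hypothetical command grammar (`SET_POWER`, `REALLOC_SUBCARRIERS`) with illustrative parameter limits. None of these names or ranges come from a standard or from the article; they exist only to show allow-listing and range validation of generated output.

```python
# Hypothetical allow-list: action name -> {parameter: (min, max)}.
# The limits are illustrative placeholders, not values from any specification.
ALLOWED_ACTIONS = {
    "SET_POWER": {"cell": (0, 1023), "dbm": (0, 46)},
    "REALLOC_SUBCARRIERS": {"cell": (0, 1023), "start": (0, 3299), "count": (1, 3300)},
}


def parse_action_sequence(text):
    """Parse 'ACTION key=value ...; ACTION ...' into validated commands.

    Raises ValueError for unknown actions, unknown or missing parameters,
    non-integer values, and values outside the allowed range.
    """
    commands = []
    for raw in text.split(";"):
        tokens = raw.split()
        if not tokens:
            continue  # tolerate empty segments such as a trailing ';'
        name, arg_tokens = tokens[0], tokens[1:]
        spec = ALLOWED_ACTIONS.get(name)
        if spec is None:
            raise ValueError(f"unknown action: {name}")
        args = {}
        for token in arg_tokens:
            key, sep, value = token.partition("=")
            if not sep or key not in spec:
                raise ValueError(f"bad parameter for {name}: {token}")
            number = int(value)  # raises ValueError if not an integer
            low, high = spec[key]
            if not low <= number <= high:
                raise ValueError(f"{name}.{key}={number} outside [{low}, {high}]")
            args[key] = number
        missing = set(spec) - set(args)
        if missing:
            raise ValueError(f"{name} missing parameters: {sorted(missing)}")
        commands.append((name, args))
    return commands


# Hypothetical model output.
generated = "SET_POWER cell=12 dbm=23; REALLOC_SUBCARRIERS cell=12 start=0 count=64;"
commands = parse_action_sequence(generated)
print(commands)
```

Rejecting anything outside the allow-list keeps a malformed or unexpected generation from reaching the radio configuration, which matters when the policy is produced token by token.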

Open Issues and Challenges

Despite their promise, LLMs must surmount key obstacles for successful 6G integration:

- Computational Overhead and Latency

Real-time decision-making is paramount in 6G, yet LLM inference can be computationally intensive. Model-compression techniques such as quantization and pruning, together with specialized hardware accelerators at base stations such as Tensor Processing Units (TPUs) or Field-Programmable Gate Arrays (FPGAs), are indispensable for achieving sub-millisecond response times.

- Security and Data Privacy

Large-scale data ingestion for training or fine-tuning an LLM raises privacy concerns. Federated learning, secure enclaves, and differential privacy approaches must mature to ensure compliance with regulatory frameworks (e.g., GDPR) and protect user data from breaches.

- Explainability and Trust

Mission-critical 6G applications (remote surgeries, autonomous drones) cannot rely on opaque models. Regulatory bodies and service providers will require interpretable decisions. Developing robust Explainable AI (XAI) tools for LLMs, capable of outlining the reasoning behind scheduling or beamforming decisions, is an open research challenge.

- Standardization and Interoperability

Organizations such as 3GPP, the European Telecommunications Standards Institute (ETSI), and IEEE have begun exploring AI-driven network management. However, forging consensus on LLM-based protocols, message formats, and training pipelines remains a work in progress. Collaborative efforts between industry, academia, and standards bodies are vital for consistent, interoperable solutions.
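Returning to the computational-overhead item in this list, quantization is the easiest of the named techniques to show in a few lines. The sketch below applies symmetric 8-bit quantization to a small list of weights and measures the reconstruction error. It is a minimal illustration under stated assumptions: the per-tensor scale, the function names, and the weight values are chosen for the example, and real deployments would rely on a framework's quantization tooling rather than hand-written code.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in quantized]


# Hypothetical weights; a 32-bit float each would shrink to one 8-bit integer.
weights = [0.82, -1.27, 0.05, 0.4, -0.66]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(q)
print(max_error <= scale / 2 + 1e-12)
```

Each weight is stored in a quarter of the space of a 32-bit float, at the cost of a rounding error bounded by half the scale step. That trade-off between memory, arithmetic cost, and accuracy is what makes quantization attractive for latency-constrained inference at the network edge.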

Conclusion

Large Language Models, underpinned by powerful Transformer architectures, hold immense potential for addressing the multifaceted demands of 6G and beyond networks. By leveraging their ability to interpret both numeric and textual inputs, these models can introduce true intelligence into network operations—enabling semantic-aware communication, dynamic resource allocation, and automated policy enforcement. Although technical hurdles such as computational efficiency, data privacy, and explainability need diligent attention, the integration of LLMs into next-generation standards promises to usher in a new paradigm of communication that is not only faster and more reliable, but also context-aware and self-optimizing. As research continues to refine LLM architectures and adapt them for high-frequency, ultra-low-latency environments, the synergy between deep learning and cutting-edge wireless technology will redefine the very nature of connectivity in the decades to come.