GAN Implementation

Author: Nirbhay Sedha

GAN stands for Generative Adversarial Network, which is a type of deep learning model. GANs consist of two neural networks: a generator and a discriminator. The generator network takes random noise as input and tries to generate realistic data samples, such as images or text, that resemble a training dataset. The discriminator network, on the other hand, is trained to distinguish between real data samples from the training set and the generated samples from the generator.

The key idea behind GANs is that the generator and discriminator networks are trained simultaneously in a competitive setting. The generator aims to generate samples that are realistic enough to fool the discriminator into classifying them as real data. Meanwhile, the discriminator aims to correctly distinguish between real and generated samples.

During training, the generator produces samples, and the discriminator classifies both real and generated samples as real or fake. The discriminator is updated from its own classification error, while the generator is updated using the gradient of the discriminator's output with respect to the generated samples. This process is repeated iteratively, with the aim that the generator produces increasingly realistic samples while the discriminator becomes better at telling real data from generated data.
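To make this feedback signal concrete, here is a minimal sketch of the two losses commonly used in this setup, assuming the discriminator outputs the probability that a sample is real. The function names are illustrative and are not taken from the original code, and the generator loss shown is the widely used non-saturating variant.

```python
import math

def binary_cross_entropy(prob, label, eps=1e-12):
    """Cross-entropy for one prediction; prob is the discriminator's P(real)."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def discriminator_loss(real_probs, fake_probs):
    """Label real samples as 1 and generated samples as 0."""
    real_term = sum(binary_cross_entropy(p, 1) for p in real_probs) / len(real_probs)
    fake_term = sum(binary_cross_entropy(p, 0) for p in fake_probs) / len(fake_probs)
    return real_term + fake_term

def generator_loss(fake_probs):
    """Non-saturating loss: the generator wants its fakes to be scored as real."""
    return sum(binary_cross_entropy(p, 1) for p in fake_probs) / len(fake_probs)

# Hypothetical discriminator outputs for two real and two generated samples.
d_loss = discriminator_loss(real_probs=[0.9, 0.8], fake_probs=[0.1, 0.2])
g_loss = generator_loss(fake_probs=[0.1, 0.2])
print(f"discriminator loss: {d_loss:.3f}, generator loss: {g_loss:.3f}")
```

With these made-up scores the discriminator is confident and correct, so its loss is low while the generator's loss is high. As the generator improves, that balance shifts.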

Training GANs can be challenging: the two networks may fail to converge, or the generator may collapse to a narrow set of outputs (mode collapse), so hyperparameters such as learning rates usually need careful tuning. Despite this, GANs have gained significant attention and have been applied successfully to image synthesis, style transfer, text generation, and even video generation. They can generate new, high-quality data samples, which is useful for tasks such as data augmentation, artistic creation, and generating synthetic data for training other deep learning models.

Since their introduction, GANs have seen many refinements and variants, such as conditional GANs, Wasserstein GANs, and Progressive GANs, which address training challenges and improve output quality. GANs continue to be an active area of research in deep learning.

POWER AND CAPABILITIES OF GAN NEURAL NETWORK

Data Generation:

GANs have the ability to generate new data samples that resemble a given training dataset. This includes generating realistic images, text, music, and even 3D objects. GANs have been used to create visually stunning and highly detailed images, showcasing the potential of generative models.

Unsupervised Learning:

GANs can learn in an unsupervised manner, which means they don’t require labeled data during training. Unlike many other deep learning models that rely on labeled data for supervision, GANs can learn from unannotated data and capture the underlying structure and distribution of the data.

Realism and High-Quality Output:

GANs have shown the ability to produce incredibly realistic output. Advanced GAN variants, such as Progressive GANs, have demonstrated the generation of high-resolution images that are often difficult to distinguish from real photographs. GANs have also been used to generate convincing human faces, art, and landscapes.

Image Editing and Manipulation:

GANs can be used for image editing and manipulation tasks. By manipulating the latent space representation of GANs, it is possible to perform operations such as image interpolation, style transfer, image morphing, and even attribute manipulation (changing facial expressions, hair color, etc.). This opens up possibilities for creative applications and fine-grained control over generated content.
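As a small illustration of latent-space interpolation, the sketch below builds a straight-line path between two latent vectors. It assumes a trained generator exists elsewhere; feeding each vector on the path to that generator would give a gradual transition between the two corresponding outputs. The helper names `lerp` and `interpolation_path` are hypothetical.

```python
def lerp(z_start, z_end, t):
    """Linear interpolation between two latent vectors, with t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_start, z_end)]

def interpolation_path(z_start, z_end, steps):
    """Return `steps` evenly spaced latent vectors from z_start to z_end."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(z_start, z_end, i / (steps - 1)) for i in range(steps)]

# Two made-up 3-dimensional latent vectors; real models often use 100 or more dimensions.
z_a = [0.0, 1.0, -1.0]
z_b = [1.0, 0.0, 1.0]

path = interpolation_path(z_a, z_b, steps=5)
for z in path:
    print(z)  # each z would be passed to the trained generator
```

Linear interpolation is the simplest choice. Some practitioners prefer spherical interpolation when the latent prior is Gaussian, but that is a design decision outside the scope of this sketch.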

Cross-Domain Translation:

GANs have the capability to perform cross-domain translation, allowing for the transformation of images from one domain to another. For example, GANs have been used to convert images from day to night, convert sketches to photorealistic images, or even translate images from one artistic style to another.

Data Augmentation:

GANs can generate synthetic data that can be used for data augmentation in training deep learning models. By generating additional training samples, GANs can help improve the robustness and generalization of models, especially when the available labeled data is limited.

Enhanced Understanding of Data Distribution:

GANs provide insights into the underlying distribution of the training data. By training the discriminator network to distinguish between real and generated samples, GANs implicitly learn the characteristics and structure of the data distribution, which can be valuable for analyzing and understanding complex datasets.

Game Theoretic Framework:

GANs are built on a game theoretic framework, where the generator and discriminator networks play a minimax game. This adversarial training process creates a dynamic competition between the two networks, driving them to improve iteratively. The concept of adversarial training has inspired research in other areas of machine learning and has opened new avenues for exploration.
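For reference, the minimax game is usually written as the following value function from the original GAN formulation. Here D(x) is the discriminator's estimated probability that x is real, G(z) is the generator's output for noise z, p_data is the data distribution, and p_z is the noise prior.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)} \left[ \log D(x) \right]
  + \mathbb{E}_{z \sim p_{z}(z)} \left[ \log \left( 1 - D(G(z)) \right) \right]
```

The discriminator tries to maximize V by scoring real samples near 1 and generated samples near 0, while the generator tries to minimize V by pushing D(G(z)) toward 1.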

IMPLEMENTATION OF A GAN USING PYTHON:

STEP 1. IMPORTING LIBRARIES

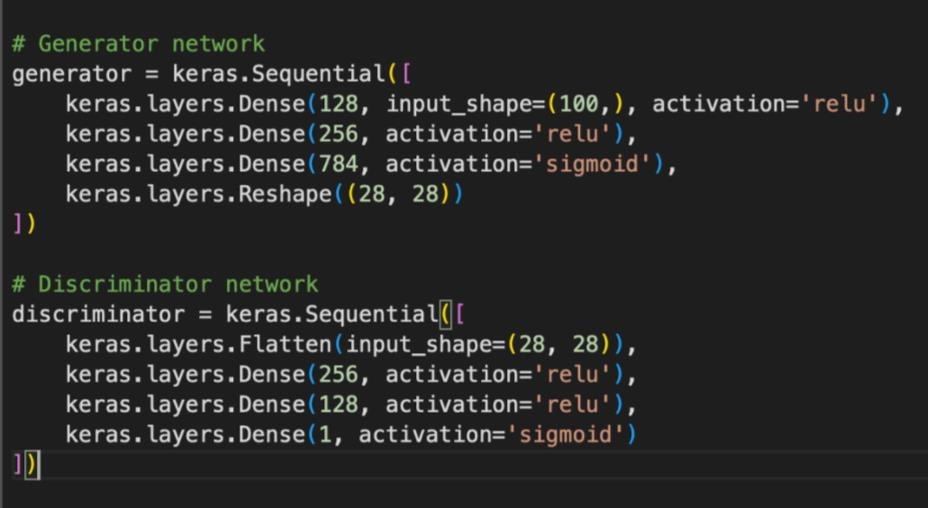

STEP 2. GENERATOR AND DISCRIMINATOR NETWORKS

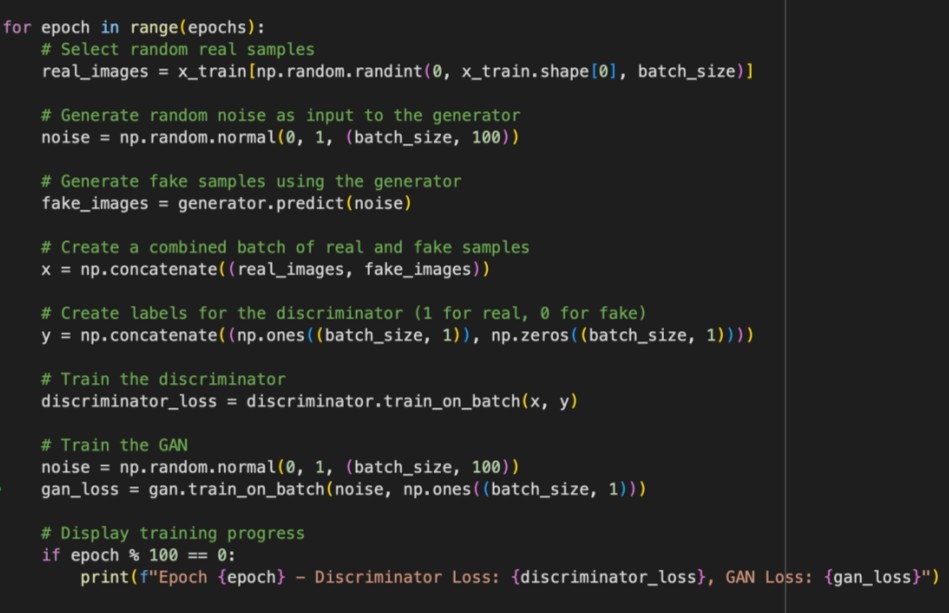
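Since the networks for this step are shown only as an image, here is a framework-free sketch of what a generator and a discriminator compute. It is not the code from the image: the layer sizes, the single hidden layer, and all class and function names are assumptions chosen for illustration, and the networks are untrained. The only detail tied to this article is the output size of 28 x 28 = 784 values, which matches a flattened MNIST image.

```python
import math
import random

def make_layer(n_in, n_out, rng):
    """Create a dense layer as (weights, biases) with small random weights."""
    weights = [[rng.gauss(0.0, 0.05) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def dense(layer, inputs):
    """Compute weights @ inputs + biases for one input vector."""
    weights, biases = layer
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

class Generator:
    """Maps a latent noise vector to a flattened image with values in [-1, 1]."""
    def __init__(self, latent_dim, hidden_dim, image_dim, rng):
        self.hidden = make_layer(latent_dim, hidden_dim, rng)
        self.output = make_layer(hidden_dim, image_dim, rng)

    def forward(self, z):
        h = relu(dense(self.hidden, z))
        return [math.tanh(v) for v in dense(self.output, h)]

class Discriminator:
    """Maps a flattened image to the estimated probability that it is real."""
    def __init__(self, image_dim, hidden_dim, rng):
        self.hidden = make_layer(image_dim, hidden_dim, rng)
        self.output = make_layer(hidden_dim, 1, rng)

    def forward(self, image):
        h = relu(dense(self.hidden, image))
        logit = dense(self.output, h)[0]
        return 1.0 / (1.0 + math.exp(-logit))

rng = random.Random(0)
LATENT_DIM, HIDDEN_DIM, IMAGE_DIM = 16, 32, 28 * 28  # sizes are illustrative

generator = Generator(LATENT_DIM, HIDDEN_DIM, IMAGE_DIM, rng)
discriminator = Discriminator(IMAGE_DIM, HIDDEN_DIM, rng)

z = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
fake_image = generator.forward(z)          # untrained, so this is just noise
score = discriminator.forward(fake_image)  # close to 0.5 before any training
print(len(fake_image), round(score, 3))
```

In practice these networks would be built with a deep learning library so that gradients are computed automatically. The plain-Python version above only shows the shape of the computation: noise in, image out for the generator, and image in, probability out for the discriminator.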

STEP 3. TRAINING THE GAN ON A DATASET (the well-known MNIST dataset of handwritten digit images is used)
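The alternating training loop can be sketched on a much smaller problem than MNIST. The example below is a toy under stated assumptions, not the training code for this article: the "dataset" is a stream of numbers drawn from a normal distribution with mean 2, the generator is a single affine map G(z) = a*z + b, the discriminator is a logistic unit D(x) = sigmoid(w*x + c), and the gradients are written out by hand. The learning rates, batch size, and step count are arbitrary choices.

```python
import math
import random

def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)

def train_toy_gan(steps=3000, batch_size=128, lr_d=0.2, lr_g=0.02, seed=0):
    rng = random.Random(seed)
    real_mean, real_std = 2.0, 1.0  # toy stand-in for a real dataset
    a, b = 1.0, 0.0                 # generator parameters: G(z) = a*z + b
    w, c = 0.0, 0.0                 # discriminator parameters: D(x) = sigmoid(w*x + c)
    history = []                    # (step, mean of the generated batch)

    for step in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch_size)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch_size)]
        fake = [a * z + b for z in noise]

        # Discriminator step: cross-entropy with label 1 for real, 0 for fake.
        grad_w = grad_c = 0.0
        for x in real:
            err = sigmoid(w * x + c) - 1.0  # d(loss)/d(logit) for a real sample
            grad_w += err * x
            grad_c += err
        for x in fake:
            err = sigmoid(w * x + c)        # d(loss)/d(logit) for a fake sample
            grad_w += err * x
            grad_c += err
        w -= lr_d * grad_w / batch_size
        c -= lr_d * grad_c / batch_size

        # Generator step: non-saturating loss -log D(G(z)), discriminator held fixed.
        grad_a = grad_b = 0.0
        for z, x in zip(noise, fake):
            err = (sigmoid(w * x + c) - 1.0) * w  # d(loss)/d(x) via the chain rule
            grad_a += err * z
            grad_b += err
        a -= lr_g * grad_a / batch_size
        b -= lr_g * grad_b / batch_size

        if step % 500 == 0:
            history.append((step, sum(fake) / batch_size))

    return (a, b), (w, c), history

(gen_a, gen_b), (disc_w, disc_c), history = train_toy_gan()
for step, fake_mean in history:
    print(f"step {step:4d}: mean of generated batch = {fake_mean:.2f}")
print(f"final generator offset b = {gen_b:.2f} (real data mean is 2.0)")
```

Because this discriminator is linear, it can only react to where the samples sit, so the generator in this toy tends to match the mean of the data while its spread shrinks. That is a small-scale illustration of the mode collapse problem that makes GAN training hard to tune.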

STEP 4. IMAGE GENERATION: THE GENERATOR'S FAKE IMAGES IMPROVE OVER TIME AS THE TRAINING LOOP REPEATS

USE CASES OF GANs:

Generative Adversarial Networks (GANs) have been applied in various domains and have a wide range of use cases. Some notable use cases of GANs include:

Image Synthesis:

GANs can generate realistic images that resemble a given dataset. This has applications in creating synthetic images for training deep learning models, data augmentation, and artistic image generation.

Image-to-Image Translation:

GANs can perform cross-domain image translation, where they can convert images from one domain to another while preserving key features. For example, they can transform images from day to night, convert sketches to photorealistic images, or even change the artistic style of an image.

Text-to-Image Synthesis:

GANs can generate images from textual descriptions. Given a text input, the generator can create corresponding visual representations, enabling applications in text-to-image synthesis, virtual world generation, and visual storytelling.

Video Generation:

GANs have been used to generate new video sequences. By extending the principles of image synthesis, GANs can generate coherent and visually realistic video frames, opening up possibilities for video prediction, video editing, and video synthesis.

Style Transfer:

Some GAN architectures can learn to separate the style and content of an image, allowing style to be transferred between images. This enables users to apply the style of a famous painting to their own photographs, or to create customized filters and effects.

Data Augmentation:

GANs can generate synthetic data that can be used for data augmentation. By creating additional training samples, GANs can help improve the robustness and generalization of machine learning models, especially when the available labeled data is limited.
